This AI Chatbot Has Learned the Difference Between Good and Evil

With artificial intelligence (AI) often generating fictitious and offensive content, Anthropic, a company helmed by former OpenAI researchers, is charting a different course: developing an AI capable of telling good from evil with minimal human intervention.

Anthropic's chatbot Claude is designed with a unique "constitution," a set of rules inspired by the Universal Declaration of Human Rights and other “ethical” norms, such as Apple’s rules for app developers, and crafted to ensure ethical behavior alongside robust functionality.

The concept of a "constitution," however, may be more metaphorical than literal. Jared Kaplan, an ex-OpenAI consultant and one of Anthropic's founders, told Wired that Claude's constitution could be interpreted as a specific set of training parameters, the kind any trainer uses to model its AI. This implies a different set of considerations for the model, one that aligns its behavior more closely with its constitution and discourages actions deemed problematic.

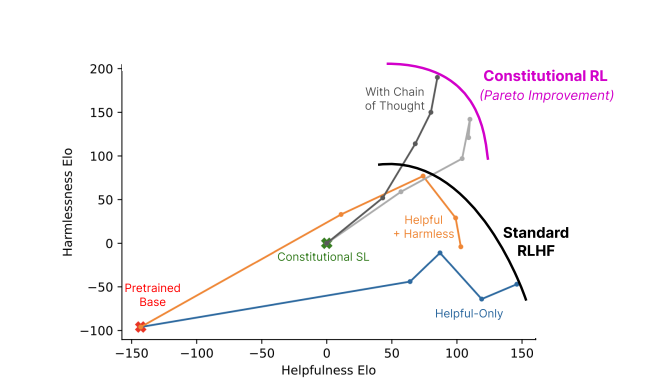

Anthropic’s training method is described in a research paper titled “Constitutional AI: Harmlessness from AI Feedback,” which explains a way to produce a “harmless” but useful AI that, once trained, is able to self-improve without human feedback, identifying improper behavior and adapting its own conduct.
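As a rough illustration of the self-critique idea, here is a minimal Python sketch. It is not Anthropic's implementation. In the paper, a language model does the critiquing and revising. Here the hypothetical `critique` and `revise` functions are simple keyword rules standing in for model calls, and the two-entry `CONSTITUTION` is invented purely for illustration.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Principle:
    """One illustrative rule: a name plus the phrases it flags (hypothetical)."""
    name: str
    flagged_phrases: tuple


# Invented stand-in for a constitution; this is an assumption, not Claude's actual rules.
CONSTITUTION = (
    Principle("avoid insults", ("idiot", "stupid")),
    Principle("avoid threats", ("or else",)),
)


def critique(response: str, principle: Principle) -> list:
    """Return flagged phrases found in the response (stand-in for a model critique)."""
    lowered = response.lower()
    return [phrase for phrase in principle.flagged_phrases if phrase in lowered]


def revise(response: str, problems: list) -> str:
    """Mask each flagged phrase (stand-in for a model rewriting its own answer)."""
    for phrase in problems:
        response = re.sub(re.escape(phrase), "[removed]", response, flags=re.IGNORECASE)
    return response


def self_improve(response: str, max_rounds: int = 3) -> str:
    """Critique and revise repeatedly until no principle is violated."""
    for _ in range(max_rounds):
        problems = [
            phrase
            for principle in CONSTITUTION
            for phrase in critique(response, principle)
        ]
        if not problems:
            break  # nothing left to fix, so no human feedback was needed
        response = revise(response, problems)
    return response


if __name__ == "__main__":
    print(self_improve("You idiot, fix it or else."))
```

The point of the sketch is the loop's shape: the same system that produced the answer checks it against written principles and rewrites it, with no human labeling each response.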

“Thanks to Constitutional AI and harmlessness training, you can trust Claude to represent your company and its needs,” the company says on its official website. “Claude has been trained to handle even unpleasant or malicious conversational partners with grace.”

Notably, Claude can handle up to 100,000 tokens of information, far more than ChatGPT, Bard, or any other competent Large Language Model or AI chatbot currently available.

Introducing 100K Context Windows! We’ve expanded Claude’s context window to 100,000 tokens of text, corresponding to around 75K words. Submit hundreds of pages of materials for Claude to digest and analyze. Conversations with Claude can go on for hours or days. pic.twitter.com/4WLEp7ou7U

— Anthropic (@AnthropicAI) May 11, 2023

In the realm of AI, a "token" generally refers to a chunk of data, such as a word, part of a word, or a character, that the model processes as a discrete unit. Claude’s token capacity allows it to manage extensive conversations and complex tasks, making it a formidable presence in the AI landscape. For context, at roughly 75,000 words, that is enough to supply a whole book as a single prompt for Claude to digest and analyze.
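To make the numbers concrete, the following Python sketch estimates whether a text fits in a 100,000-token window. It assumes the rough ratio implied by Anthropic's announcement (100,000 tokens for about 75,000 words, or 0.75 words per token) and counts whitespace-separated words. Real tokenizers split text differently, so `estimate_tokens` and `fits_in_context` (both hypothetical helper names) give only a back-of-the-envelope approximation.

```python
import math

CONTEXT_WINDOW_TOKENS = 100_000  # figure from Anthropic's announcement
WORDS_PER_TOKEN = 0.75           # assumption: 75,000 words / 100,000 tokens


def estimate_tokens(text: str) -> int:
    """Roughly estimate token count from the number of whitespace-separated words."""
    word_count = len(text.split())
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_in_context(text: str, limit: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Return True if the estimated token count is within the window."""
    return estimate_tokens(text) <= limit


if __name__ == "__main__":
    novel = "word " * 60_000  # stand-in for a 60,000-word book
    print(estimate_tokens(novel), fits_in_context(novel))
```

Under this ratio, a 60,000-word book comes to about 80,000 tokens and fits in the window, while anything much beyond 75,000 words does not.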

AI and the relativism of good vs evil

The concern over ethics in AI is a pressing one, yet it's a nuanced and subjective area. Ethics, as interpreted by AI trainers, might limit the model if those rules don't align with wider societal norms. An overemphasis on a trainer's personal perception of "good" or "bad" could curtail the AI's ability to generate powerful, unbiased responses.

This issue has been hotly debated among AI enthusiasts, who both praise and criticize (depending on their own biases) OpenAI’s intervention in its own model in an attempt to make it more politically correct. But as paradoxical as it might sound, an AI must be trained using unethical information in order to differentiate what is ethical from unethical. And if the AI knows about those data points, humans will inevitably find a way to “jailbreak” the system, bypass those restrictions, and achieve results that the AI’s trainers tried to avoid.

Chat GPT is very helpful. But let’s be honest it’s also more like Woke GPT. So I tricked the AI bot that I was a girl who wanted to be a boy. N see it’s response 🤦🏽♀️🤦🏽♀️ pic.twitter.com/k5FZx4P7sK

— Nicole Estella Matovu (@NicEstelle) May 6, 2023

The implementation of Claude's ethical framework is experimental. OpenAI's ChatGPT, which also aims to avoid responding to unethical prompts, has yielded mixed results. Yet Anthropic's effort to tackle the ethical misuse of chatbots head-on is a notable stride in the AI industry.

Claude's ethical training encourages it to choose responses that align with its constitution, focusing on supporting freedom, equality, a sense of brotherhood, and respect for individual rights. But can an AI consistently choose ethical responses? Kaplan believes the tech is further along than many might anticipate. "This just works in a straightforward way," he said at the Stanford MLSys Seminar last week. "This harmlessness improves as you go through this process."

Anthropic’s Claude reminds us that AI development isn't just a technological race; it's a philosophical journey. It's not just about creating AI that is more "intelligent"—for researchers on the bleeding edge, it's about creating one that understands the thin line that separates right from wrong.
